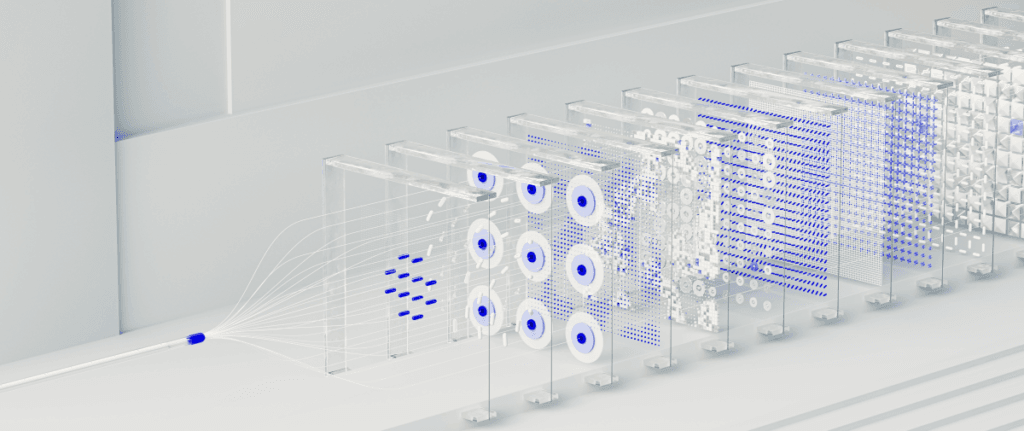

When it comes to machine learning, ensemble methods are like having a team of experts instead of relying on a single opinion. They combine multiple models to create better predictions. This approach often leads to higher accuracy and robustness in solving complex problems. In this article, we’ll dive deep into three popular ensemble methods: Bagging, Boosting, and Stacking. Let’s explore how they work, their differences, and how to implement them in a step-by-step manner.

What Are Ensemble Methods?

Imagine you’re trying to guess the number of candies in a jar. Instead of making a wild guess yourself, you ask a group of friends to estimate the count. When you average their guesses, you get closer to the actual number. This is the core idea behind ensemble methods: combining multiple predictions to improve accuracy.
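The candy-jar intuition can be checked with a quick simulation. This is only a sketch: the true count, the number of friends, and the noise level are made-up values, and it assumes each guess is unbiased and independent of the others.

```python
import numpy as np

rng = np.random.default_rng(0)

true_count = 500   # hypothetical number of candies in the jar
n_friends = 25     # hypothetical number of guessers

# Each guess is the true count plus independent, unbiased noise.
guesses = true_count + rng.normal(loc=0.0, scale=80.0, size=n_friends)

individual_errors = np.abs(guesses - true_count)
average_error = abs(guesses.mean() - true_count)

print(f"Mean error of a single guess: {individual_errors.mean():.1f}")
print(f"Error of the averaged guess:  {average_error:.1f}")
```

The averaged guess can never be worse than the typical individual guess, and it is usually much better when the errors are independent. If everyone makes the same mistake, averaging does not help, which is why ensembles benefit from diverse models.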

In machine learning, ensemble methods aggregate the results of different models to achieve better performance. These models can be of the same type (homogeneous) or different types (heterogeneous).

The three major techniques we’ll cover—Bagging, Boosting, and Stacking—each have unique strategies for combining models.

Bagging: Bootstrap Aggregating

Bagging, short for Bootstrap Aggregating, focuses on reducing variance by creating multiple versions of the training dataset. These datasets are formed by randomly sampling with replacement, a technique known as bootstrapping. Once individual models are trained on these datasets, their predictions are averaged (for regression) or voted on (for classification).
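To see what sampling with replacement does to a dataset, here is a small sketch using row indices only. The dataset size is an arbitrary illustrative value.

```python
import numpy as np

rng = np.random.default_rng(0)
n_samples = 10_000  # hypothetical training-set size

# Draw n_samples row indices with replacement: one bootstrap sample.
bootstrap_idx = rng.integers(0, n_samples, size=n_samples)

unique_fraction = np.unique(bootstrap_idx).size / n_samples
print(f"Fraction of distinct rows in the bootstrap sample: {unique_fraction:.3f}")
# For large n the expected fraction approaches 1 - 1/e, roughly 0.632.
```

Because each bootstrap sample leaves out roughly a third of the rows and repeats others, every model sees a slightly different view of the data.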

How Does Bagging Work?

- Bootstrap Sampling: Generate several datasets by sampling from the original training data with replacement.

- Train Models: Train a base model (e.g., decision tree) on each of these datasets.

- Combine Predictions: Aggregate the predictions by averaging or majority voting.
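The three steps above can be sketched by hand in a few lines. This is a minimal illustration rather than a production implementation: the ensemble size and the choice of the Iris dataset are assumptions made for the example.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=42, stratify=y
)

rng = np.random.default_rng(42)
n_models = 25  # illustrative ensemble size
n_train = len(X_train)

# Steps 1 and 2: draw a bootstrap sample and fit one tree per sample.
trees = []
for _ in range(n_models):
    idx = rng.integers(0, n_train, size=n_train)  # sampling with replacement
    tree = DecisionTreeClassifier(random_state=0)
    tree.fit(X_train[idx], y_train[idx])
    trees.append(tree)

# Step 3: majority vote across trees for every test row.
all_preds = np.stack([t.predict(X_test) for t in trees])  # (n_models, n_test)
bagged_pred = np.array([np.bincount(col).argmax() for col in all_preds.T])

bagged_accuracy = (bagged_pred == y_test).mean()
print("Bagged accuracy:", bagged_accuracy)
```

In practice, scikit-learn's `BaggingClassifier` wraps the same idea, so the manual loop is mainly useful for understanding what happens under the hood.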

Real-World Analogy

Think of Bagging as consulting several doctors for a second opinion. Each doctor reviews a slightly different set of patient records. When you aggregate their advice, you’re more likely to get a reliable diagnosis.

Advantages of Bagging

- Reduces overfitting, especially in high-variance models.

- Works well with unstable algorithms like Decision Trees.

Example: Random Forest

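Random Forest is a classic example of Bagging applied to decision trees. On top of bootstrap sampling, each tree considers only a random subset of features at every split, which makes the trees less correlated with one another. For classification, each tree in the forest votes and the majority decision is the final output. Check out this Random Forest tutorial for implementation tips.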

Boosting: Sequential Corrections

Boosting takes a different approach. Instead of training models independently, Boosting builds models sequentially, where each new model corrects the errors of the previous ones. It’s all about minimizing bias and refining predictions.

How Does Boosting Work?

- Train Initial Model: Start with a weak learner (e.g., a shallow decision tree).

- Calculate Errors: Identify where the model performs poorly.

- Train Next Model: Focus on correcting these errors.

- Combine Models: Aggregate all models’ outputs to make a final prediction.
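One concrete way to carry out these steps is the classic AdaBoost reweighting scheme, sketched below for a binary problem. The synthetic dataset, the number of rounds, and the use of depth-1 trees are assumptions chosen for illustration; other boosting algorithms handle the "focus on errors" step differently.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic binary problem; labels recoded to -1/+1 for the weight update.
X, y01 = make_classification(
    n_samples=500, n_features=10, n_informative=5, random_state=0
)
y = 2 * y01 - 1
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0
)

n_rounds = 50  # illustrative number of weak learners
weights = np.full(len(X_train), 1.0 / len(X_train))
stumps, alphas = [], []

for _ in range(n_rounds):
    # Train a weak learner (depth-1 tree) using the current sample weights.
    stump = DecisionTreeClassifier(max_depth=1, random_state=0)
    stump.fit(X_train, y_train, sample_weight=weights)
    pred = stump.predict(X_train)

    # Weighted error rate, clipped to keep the logarithm finite.
    err = np.clip(weights[pred != y_train].sum(), 1e-10, 1 - 1e-10)
    alpha = 0.5 * np.log((1 - err) / err)

    # Increase the weight of misclassified rows, decrease the rest.
    weights *= np.exp(-alpha * y_train * pred)
    weights /= weights.sum()

    stumps.append(stump)
    alphas.append(alpha)

# Final prediction: sign of the alpha-weighted vote of all weak learners.
scores = sum(a * s.predict(X_test) for a, s in zip(alphas, stumps))
boosted_pred = np.where(scores >= 0, 1, -1)
boosted_accuracy = (boosted_pred == y_test).mean()
print("Boosted accuracy:", boosted_accuracy)
```

Rows that earlier learners got wrong carry more weight in later rounds, which is how the ensemble concentrates on its weak spots.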

Real-World Analogy

Imagine you’re learning to play the piano. Your teacher points out your mistakes in each lesson, and you focus on improving those specific areas. Over time, your skills improve significantly.

Popular Boosting Algorithms

- AdaBoost (Adaptive Boosting)

- Gradient Boosting

- XGBoost, LightGBM, and CatBoost

Advantages of Boosting

- Reduces bias by focusing on weak spots.

- Often achieves high accuracy.

Example: Gradient Boosting

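In Gradient Boosting, each new model is fit to the residual errors of the ensemble built so far. More generally, it is fit to the negative gradient of a chosen loss function, which equals the residuals when the loss is squared error. Learn more about Gradient Boosting for advanced insights.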
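The residual-fitting idea is easiest to see in a regression setting with squared-error loss. The sketch below is a simplified illustration on a toy sine curve; the learning rate, tree depth, and number of rounds are arbitrary choices, and real libraries add many refinements on top of this loop.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

rng = np.random.default_rng(0)
X = np.sort(rng.uniform(0, 6, size=200)).reshape(-1, 1)
y = np.sin(X).ravel() + rng.normal(scale=0.1, size=200)  # toy target

learning_rate = 0.1
n_rounds = 100

# Start from a constant prediction: the mean minimizes squared error.
prediction = np.full_like(y, y.mean())
mse_history = [np.mean((y - prediction) ** 2)]

for _ in range(n_rounds):
    # For squared-error loss, the negative gradient is the residual.
    residuals = y - prediction
    tree = DecisionTreeRegressor(max_depth=2, random_state=0)
    tree.fit(X, residuals)
    # Take a small corrective step in the direction the tree suggests.
    prediction += learning_rate * tree.predict(X)
    mse_history.append(np.mean((y - prediction) ** 2))

print(f"Training MSE before boosting: {mse_history[0]:.4f}")
print(f"Training MSE after boosting:  {mse_history[-1]:.4f}")
```

The small learning rate means each tree fixes only part of the remaining error, which is why boosting typically needs many rounds and why the learning rate and the number of estimators are tuned together.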

Stacking: Layered Learning

Stacking is like building a layered cake of models. It combines multiple models (base learners) using a meta-model that learns how to blend their predictions. The meta-model is trained on the outputs of the base models, adding an extra layer of learning.

How Does Stacking Work?

- Train Base Models: Use diverse algorithms to train on the same dataset.

- Generate Predictions: Each base model produces predictions.

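- Train Meta-Model: Feed these predictions into a meta-model, which learns to combine them effectively. The predictions should be out-of-fold, meaning each one comes from a base model that did not train on that row; otherwise the meta-model learns from leaked labels.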
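Here is a hand-rolled sketch of those steps, using cross-validation so that the meta-model only sees predictions made on rows the base models did not train on. The choice of base models, the fold count, and the Iris dataset are assumptions for illustration.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_predict, train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=42, stratify=y
)

base_models = [
    DecisionTreeClassifier(max_depth=3, random_state=0),
    KNeighborsClassifier(n_neighbors=5),
]

# Out-of-fold class probabilities: every training row is predicted by a
# model that never saw that row during fitting.
meta_train = np.hstack([
    cross_val_predict(m, X_train, y_train, cv=5, method="predict_proba")
    for m in base_models
])

# Refit each base model on all training data to build test-time features.
meta_test = np.hstack([
    m.fit(X_train, y_train).predict_proba(X_test) for m in base_models
])

# The meta-model learns how to weigh the base models' probabilities.
meta_model = LogisticRegression(max_iter=1000)
meta_model.fit(meta_train, y_train)
stacked_accuracy = meta_model.score(meta_test, y_test)
print("Stacked accuracy:", stacked_accuracy)
```

scikit-learn's `StackingClassifier` performs this cross-validated bookkeeping internally, so the manual version is mostly useful for seeing where the meta-model's inputs come from.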

Real-World Analogy

Think of Stacking as a movie review aggregator. Each critic (base model) provides their review score. The aggregator (meta-model) combines these scores to give you an overall rating.

Advantages of Stacking

- Utilizes the strengths of diverse algorithms.

- Often outperforms individual models or simpler ensembles.

Example: Implementation

Check out this StackingClassifier guide for practical implementation.

Key Differences Between Bagging, Boosting, and Stacking

| Feature | Bagging | Boosting | Stacking |
| --- | --- | --- | --- |
| Goal | Reduce variance | Reduce bias | Combine strengths |
| Training | Parallel | Sequential | Layered |
| Focus | Independence | Error correction | Meta-learning |
| Examples | Random Forest | Gradient Boosting | StackingClassifier |

When to Use Each Method

- Bagging: Use when your model is prone to overfitting, especially with high-variance algorithms like decision trees.

- Boosting: Use when you need high accuracy and can afford longer training times.

- Stacking: Use when you want to leverage the strengths of diverse models.

Getting Started with Ensemble Methods

Step 1: Choose the Right Ensemble Method

- For simpler datasets, start with bagging.

- For complex problems, try boosting.

- When in doubt, experiment with stacking to combine multiple models.
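Since the right choice depends on the data, one reasonable way to decide is to score each candidate with cross-validation. The sketch below does this on the Iris dataset; the specific models and settings are illustrative assumptions, not recommendations.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import (
    GradientBoostingClassifier,
    RandomForestClassifier,
    StackingClassifier,
)
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)

candidates = {
    "bagging (random forest)": RandomForestClassifier(
        n_estimators=100, random_state=42
    ),
    "boosting (gradient boosting)": GradientBoostingClassifier(random_state=42),
    "stacking": StackingClassifier(
        estimators=[
            ("dt", DecisionTreeClassifier(max_depth=3, random_state=42)),
            ("knn", KNeighborsClassifier(n_neighbors=5)),
        ],
        final_estimator=LogisticRegression(max_iter=1000),
    ),
}

# 5-fold cross-validated accuracy for each candidate.
cv_results = {}
for name, model in candidates.items():
    scores = cross_val_score(model, X, y, cv=5)
    cv_results[name] = scores.mean()
    print(f"{name}: {scores.mean():.3f} +/- {scores.std():.3f}")
```

On a small, clean dataset like Iris the three methods tend to score similarly, so differences within the reported spread should not be over-interpreted.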

Step 2: Use Libraries Like Scikit-learn

- Scikit-learn offers excellent implementations of Random Forest, AdaBoost, and more. Learn more here.

Step 3: Tune Hyperparameters

Ensemble methods often require careful tuning of parameters like the number of estimators, learning rate, and tree depth.
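A grid search is one common way to tune these parameters. The sketch below searches a deliberately tiny grid for a gradient boosting model; the grid values and fold count are illustrative assumptions, and realistic searches usually cover wider ranges.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import GridSearchCV, train_test_split

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=42, stratify=y
)

# Small illustrative grid over the three parameters mentioned above.
param_grid = {
    "n_estimators": [50, 100],
    "learning_rate": [0.05, 0.1],
    "max_depth": [2, 3],
}

search = GridSearchCV(
    GradientBoostingClassifier(random_state=42),
    param_grid,
    cv=3,
    scoring="accuracy",
)
search.fit(X_train, y_train)

print("Best parameters:", search.best_params_)
print("Cross-validated accuracy:", round(search.best_score_, 3))
print("Held-out accuracy:", round(search.score(X_test, y_test), 3))
```

The test set is touched only once, after the search has finished, so the held-out accuracy is not biased by the tuning process.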

Step-by-Step Implementation Guide

Bagging with Random Forest – Python Code

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

# Load data and hold out 30% of the rows for evaluation
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=42
)

# Train a forest of 100 bagged decision trees
rf = RandomForestClassifier(n_estimators=100, random_state=42)
rf.fit(X_train, y_train)

# Evaluate accuracy on the held-out rows
print("Accuracy:", rf.score(X_test, y_test))
```

Boosting with Gradient Boosting – Python Code

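```python
from sklearn.ensemble import GradientBoostingClassifier

# Reuses X_train, X_test, y_train, y_test from the Random Forest example above

# Train 100 sequential trees, each scaled by the learning rate
gb = GradientBoostingClassifier(n_estimators=100, learning_rate=0.1, random_state=42)
gb.fit(X_train, y_train)

# Evaluate accuracy on the held-out rows
print("Accuracy:", gb.score(X_test, y_test))
```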

Stacking with Stacking Classifier – Python Code

```python
from sklearn.ensemble import StackingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

# Reuses X_train, X_test, y_train, y_test from the Random Forest example above

# Define base models as (name, estimator) pairs
estimators = [
    ("dt", DecisionTreeClassifier(random_state=42)),
    ("svc", SVC(probability=True, random_state=42)),
]

# Define stacking model: logistic regression blends the base models' outputs
stack = StackingClassifier(estimators=estimators, final_estimator=LogisticRegression())
stack.fit(X_train, y_train)

# Evaluate accuracy on the held-out rows
print("Accuracy:", stack.score(X_test, y_test))
```

Conclusion

Ensemble methods are powerful tools for improving machine learning models. Whether you choose Bagging for stability, Boosting for precision, or Stacking for diversity, these techniques can elevate your projects. Experiment with them, and let the data guide your choice. For more advanced techniques, explore resources like Scikit-learn’s ensemble documentation.

Ready to dive deeper? Check out these resources:

- The Ultimate Guide to the k-Nearest Neighbors (KNN) Algorithm for Precise Classification and Clustering

- Mastering Decision Tree Algorithm: How Machines Make Intelligent Decisions

- Decision Tree vs Neural Network: Unveiling the Key Differences for Smarter AI Decisions

- Gradient Boosting vs Random Forest: The Ultimate Guide to Choosing the Best Algorithm!

- Powerful Machine Learning Algorithms You Must Know in 2025
